Feature velocity is absolutely critical in today’s environment; however, so is quality. A bad first experience may keep a customer from coming back, and resolving the issue after the fact may be too late, because a first impression is difficult to change. What, then, should we do? You can spend more time testing, but you reach diminishing returns while remaining in the lab. There is no substitute for real-world operating conditions, and there is no way to know a priori how customers will react to new features. Fortunately, there are a number of techniques you can use to release new features to the world with more confidence.

There is a saying that in order to speed up, you need to slow down. This holds true in software development, where we want to control the rate at which users receive new features. If everyone gets a new release at once and there is a problem, you have affected all of your customers, which means a bad day for everyone. Instead, you want to roll out the feature slowly to flush out any issues that arise.

If issues do arise, rapid rollback is essential. Even if only a small subset of customers is affected by the new release, you want to minimize that impact quickly. The release strategies below provide mechanisms to roll back faster than a typical software deployment.

In general, you want to release new versions and features slowly at first and then increase the pace as you gain confidence in the new software. Roll forward slowly, but always roll back quickly. Although each individual rollout is slower, you will increase your overall feature velocity and customer satisfaction.

Controlling Rollout Rates with Deployments

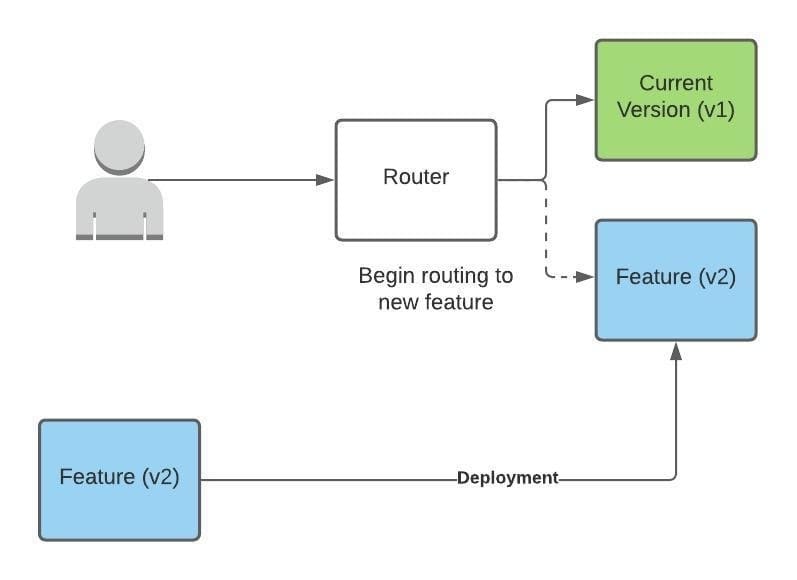

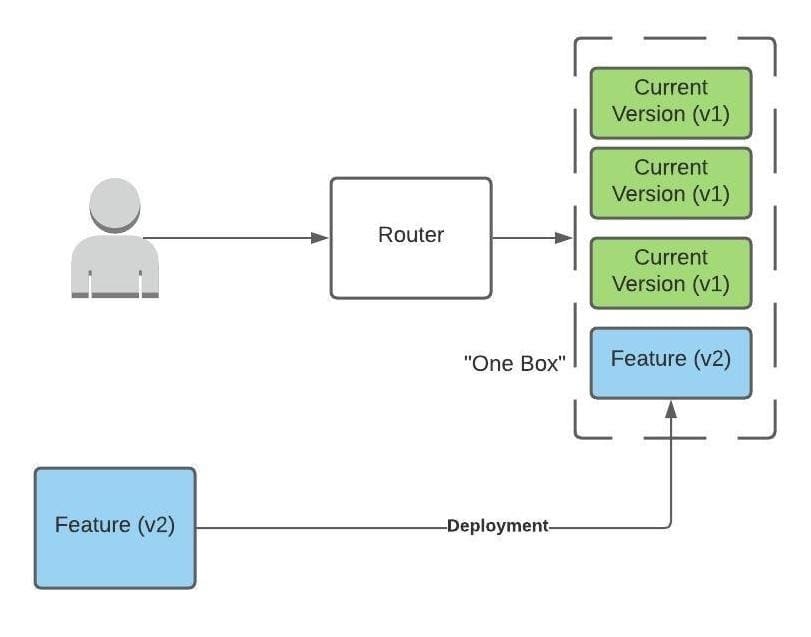

One approach is to control the rate at which servers in your fleet receive the new version. Customer traffic continues to be load balanced across the entire fleet, but only a percentage of the hosts have the new feature. This process typically starts with a single host, called the OneBox, receiving the update. You carefully monitor this one host and raise an alarm if there are any problems. If that goes well, you proceed with a staged deployment to the rest of the fleet.
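A minimal sketch of how a staged deployment plan might be computed, assuming the fleet is simply a list of host names. The `deployment_waves` method and the 10%/50%/100% stages are hypothetical choices for illustration; a real pipeline would pause after each wave and check alarms before continuing.

```ruby
# Hypothetical sketch: plan a staged deployment that starts with a single
# OneBox host and then expands by cumulative percentage of the fleet.
# The stage percentages are illustrative, not a recommendation.
def deployment_waves(hosts, stages = [10, 50, 100])
  raise ArgumentError, "fleet is empty" if hosts.empty?

  waves = [[hosts.first]] # wave 1: the OneBox
  deployed = 1
  stages.each do |percent|
    # Cumulative host count that should run the new version after this stage.
    target = [(hosts.size * percent / 100.0).ceil, hosts.size].min
    next if target <= deployed

    waves << hosts[deployed...target]
    deployed = target
  end
  # Cover any remaining hosts, even if the stages stop short of 100%.
  waves << hosts[deployed..-1] if deployed < hosts.size
  waves
end

fleet = (1..20).map { |i| "host-#{i}" }
deployment_waves(fleet).each_with_index do |wave, i|
  # In a real pipeline you would pause here and check alarms before continuing.
  puts "Wave #{i + 1}: #{wave.join(', ')}"
end
```

With 20 hosts, this yields waves of 1 (the OneBox), 1, 8, and 10 hosts.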

This approach contrasts with Blue/Green deployments. With Blue/Green, you carry the overhead of a second set of hosts; the two fleets are referred to as blue and green, and they alternate roles from one release to the next. A deployment is completed to the idle fleet, and then traffic is routed to the newly updated hosts. This strategy incurs infrastructure overhead but provides extremely fast roll-forward and rollback times. However, the basic concept is all-or-nothing: once you switch over to the new fleet, any potential impact will be widespread. You rolled forward extremely fast, but remember that this was not our goal. We want to roll forward at a slow, methodical pace initially.

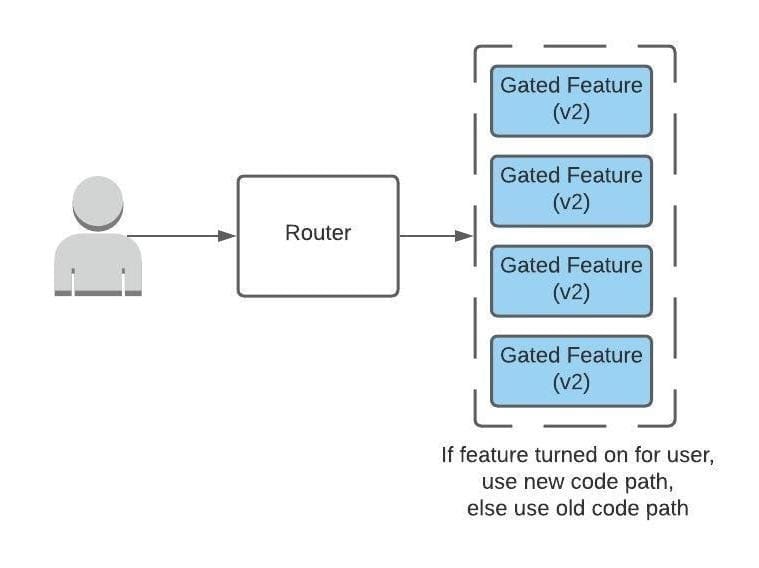
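For contrast, here is a minimal sketch of a Blue/Green cutover, assuming a router object that decides which fleet serves traffic. `BlueGreenRouter` and its methods are hypothetical; in practice the switch is usually made in a load balancer or DNS.

```ruby
# Hypothetical sketch of a Blue/Green cutover. The fleets and router are
# stand-ins; in practice the switch usually lives in a load balancer or DNS.
class BlueGreenRouter
  attr_reader :live

  def initialize(versions, live = :blue)
    @versions = versions.dup # e.g. { blue: "v1", green: "v1" }
    @live = live
  end

  # The fleet not currently serving traffic, where the next version is deployed.
  def idle
    @live == :blue ? :green : :blue
  end

  def deploy_to_idle(version)
    @versions[idle] = version
  end

  # Every request moves at once: fast, but all-or-nothing.
  def switch!
    @live = idle
  end

  def version_for(_request)
    @versions.fetch(@live)
  end
end

router = BlueGreenRouter.new({ blue: "v1", green: "v1" })
router.deploy_to_idle("v2") # green now runs v2 but serves no traffic yet
router.switch!              # all traffic goes to v2 immediately
router.switch!              # rollback is the same fast, fleet-wide switch
```

Both the roll forward and the rollback are a single switch, which is why they are fast, and also why every customer moves at once.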

Controlling Rollout Rates with Feature Gates

A different philosophy is to put the new software onto the hosts in the fleet, but control which users get that new feature using a flag, or feature gate. This involves partitioning your users into bins, typically based on a hash of a customer identifier or attribute. Using this technique, you can start by releasing your feature to, say, only 5% of your users. If that goes well, increase it to 10%, 30%, and so on until it reaches 100%. At 100%, your feature is generally available.
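The hash-based binning described above can be sketched in a few lines, assuming customers are identified by a string ID. The `bin_for` and `gated_on?` helpers are hypothetical, and CRC32 (from Ruby's standard `zlib` library) is just one reasonable choice of stable hash.

```ruby
require 'zlib'

# Sketch of percentage-based feature gating: hash each customer ID into one of
# 100 bins. CRC32 is an illustrative choice; any stable hash works.
def bin_for(customer_id, bins: 100)
  Zlib.crc32(customer_id.to_s) % bins
end

# A customer sees the feature when their bin falls below the rollout percentage.
def gated_on?(customer_id, percentage)
  bin_for(customer_id) < percentage
end

customers = (1..10_000).map { |i| "customer-#{i}" }
[5, 10, 30, 100].each do |pct|
  enabled = customers.count { |c| gated_on?(c, pct) }
  puts "#{pct}% -> #{enabled} of #{customers.size} customers"
end
```

Because the bin depends only on the customer ID, each customer gets a consistent experience, and raising the percentage only ever adds customers. One design choice worth noting: hashing the ID alone puts the same customers in the first 5% of every feature; including the feature name in the hashed string would spread early exposure across different customers.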

This technique allows you to minimize the blast radius, or impact, of any bugs that might exist. If the new feature causes an issue, you want to find out as quickly as possible while affecting the fewest customers.

It does come at the cost of added complexity in the code: multiple code paths must be maintained for some period of time. Once a feature is generally available (GA), clean up the old code paths to reduce confusion and the time spent dealing with them later.

Nonetheless, feature gates offer an extremely effective way to manage safe feature rollouts. They are also extremely helpful when the timing of database migrations and software deployments must be managed. If it is difficult or impossible to create a database schema that supports both versions, then this technique can be used to precisely control the timing of the cutover.
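A sketch of gate-controlled cutover, assuming the old and new schemas are read through separate code paths. `LegacyStore`, `NewStore`, and `PollRepository` are hypothetical; the gate can be any callable, such as a lambda wrapping a flag lookup.

```ruby
# Hypothetical stores standing in for the old and new schemas.
class LegacyStore
  def find_title(poll_id)
    "legacy title for poll #{poll_id}"
  end
end

class NewStore
  def find_title(poll_id)
    "new title for poll #{poll_id}"
  end
end

# Reads go through the gate, which is checked on every call, so the cutover
# (and any rollback) happens when the flag flips rather than when code deploys.
class PollRepository
  def initialize(gate, legacy: LegacyStore.new, current: NewStore.new)
    @gate = gate # any object responding to #call, e.g. a lambda over a flag lookup
    @legacy = legacy
    @current = current
  end

  def find_title(poll_id)
    store = @gate.call ? @current : @legacy
    store.find_title(poll_id)
  end
end

cutover = false
repo = PollRepository.new(-> { cutover })
repo.find_title(7) # legacy schema
cutover = true     # flip once the new schema is migrated and populated
repo.find_title(7) # new schema
```

Both code paths ship in the same deployment, so the deployment and the migration no longer have to land at exactly the same moment.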

Ideally, the first production user to receive the gated feature is a canary account. This canary user is created by your team and used in ongoing automated testing, so the new capabilities are exercised in production but only affect your own account. This can catch many environment-specific issues that would not have appeared in staging environments or system tests. However, test coverage will be limited to the use cases covered by your manual testing or test scripts using this account. Actual users tend to do things you would not expect, but this technique is invaluable for knowing there is a problem before your customers do.
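A sketch of how a canary account can be enabled ahead of any percentage, assuming a gate that combines an explicit allow-list with hash-based bins. `FeatureGate`, `allow!`, and the account names are hypothetical and are not the rollout gem's API.

```ruby
require 'set'
require 'zlib'

# Hypothetical gate combining an explicit allow-list (for your team's canary
# account) with percentage bins for everyone else.
class FeatureGate
  attr_accessor :percentage

  def initialize(percentage: 0, allowed: [])
    @percentage = percentage
    @allowed = Set.new(allowed.map(&:to_s))
  end

  def allow!(user_id)
    @allowed << user_id.to_s
  end

  def active?(user_id)
    id = user_id.to_s
    # Allow-listed users always pass; others are binned by a stable hash.
    @allowed.include?(id) || Zlib.crc32(id) % 100 < @percentage
  end
end

gate = FeatureGate.new(allowed: ["canary-account"])
gate.active?("canary-account") # => true: the canary sees the feature first
gate.active?("customer-42")    # => false while the percentage is still 0
```

Once the canary's automated tests pass in production, you would raise `percentage` for real customers.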

Never Be Afraid to Rollback

So far, we have talked about the speed at which we roll forward. On the flip side, it is critical that you can also roll back safely and quickly. If you are about to undergo a software release and you don’t know your rollback procedure, stop and go back to the planning step for your release.

The simplest form of rollback is to run another deployment, this time back to the original version of the code. However, this is fairly slow compared to other options. Some of the techniques we discussed, such as Blue/Green deployments, use routing to quickly change which endpoint, and therefore which version of the software, handles a given request. Feature flags also provide a quick rollback mechanism: when the flag is toggled off, the code path immediately switches from the new behavior back to the old.
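A minimal sketch of why a flag gives near-instant rollback, assuming the flag value is read on every request. `FlagStore` is a hypothetical in-memory stand-in for a shared store such as Redis, and `render_poll` is an illustrative request handler.

```ruby
# Hypothetical stand-in for a shared flag store such as Redis. The point is
# that the flag is read on every request, so flipping it changes behavior
# without a deployment.
class FlagStore
  def initialize
    @flags = {}
  end

  def enable(name)
    @flags[name] = true
  end

  def disable(name)
    @flags[name] = false
  end

  def on?(name)
    @flags.fetch(name, false) # unknown flags default to off
  end
end

def render_poll(flags, user_id)
  if flags.on?(:rewards)
    "poll with rewards question for #{user_id}" # new path
  else
    "poll for #{user_id}"                        # old path, still deployed
  end
end

flags = FlagStore.new
flags.enable(:rewards)
render_poll(flags, "u1") # new path
flags.disable(:rewards)  # roll back: no redeploy needed
render_poll(flags, "u1") # old path on the very next request
```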

You should always feel comfortable with your rollback mechanism. If there is doubt about whether a release is causing issues, or you see questionable data in your metrics, go ahead and roll back. Rolling back a release is not necessarily a bad thing, and it should not feel like a failure: you always learn something from the roll forward that you can use to improve things. You now have data in your logs and metrics that you can analyze to see the effect of the new code in production. That analysis may take longer than you expect, and you don’t want any ongoing customer impact while it is done. Hence, when in doubt, roll back. An automated solution for rolling versions forward and back is critical so that you can do this with ease, speed, and confidence.
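As a sketch of what an automated "when in doubt, roll back" check could look like, assuming you can count requests and errors for the old and new versions separately. The `Metrics` struct, the 0.5-percentage-point threshold, and the minimum sample size are hypothetical values, not recommendations.

```ruby
# Hypothetical rollback check comparing the new version's error rate with the
# baseline. Thresholds and outcome names are assumptions; tune them to your alarms.
Metrics = Struct.new(:requests, :errors) do
  def error_rate
    requests.zero? ? 0.0 : errors.to_f / requests
  end
end

def rollout_decision(baseline, candidate, max_increase: 0.005, min_requests: 500)
  # Questionable data means roll back, even on a small sample.
  return :roll_back if candidate.error_rate - baseline.error_rate > max_increase
  # Not enough traffic yet to justify widening the rollout.
  return :hold if candidate.requests < min_requests

  :proceed
end

baseline  = Metrics.new(100_000, 120) # 0.12% errors on the current version
candidate = Metrics.new(5_000, 60)    # 1.2% errors on the new version
rollout_decision(baseline, candidate) # => :roll_back
```

The rollback check deliberately runs before the sample-size check, so clearly bad numbers trigger a rollback even early in the rollout, while merely thin data only holds the rollout at its current size.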

Let’s Not Forget About Database Changes

Database changes often become the most complicated part of a rollout. To the extent possible, make only non-breaking changes to your database schema. This means you only ever add tables, add columns, or make existing columns less restrictive. Sure, you may end up with a few tables that do nothing, but adhering to this constraint allows you to roll forward and back with ease.
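A sketch of how the additive-only rule could be checked before a release, assuming planned migrations are listed as arrays of ActiveRecord-style operation names and arguments. `non_breaking?` is a hypothetical helper written in plain Ruby, not part of Rails; the operation names mirror real migration methods such as `create_table`, `add_column`, and `change_column_null`.

```ruby
# Hypothetical plain-Ruby screen for the "additive only" rule.
def non_breaking?(op, *args)
  options = args.last.is_a?(Hash) ? args.last : {}
  case op
  when :create_table
    true
  when :add_column
    # A NOT NULL column without a default breaks inserts from the old code.
    options[:null] != false || options.key?(:default)
  when :change_column_null
    args[2] == true # true relaxes NOT NULL; false tightens it
  else
    false # remove_column, rename_column, change_column, etc. are not additive
  end
end

plan = [
  [:create_table, :rewards],
  [:add_column, :users, :reward_points, :integer, { default: 0 }],
  [:remove_column, :polls, :legacy_flag]
]
plan.reject { |op| non_breaking?(*op) } # => [[:remove_column, :polls, :legacy_flag]]
```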

Code defensively for your database schema changes as well. Assume you may not be able to backfill data for all new columns before the feature is turned on. Write your code so that the absence of a value is handled the same as the default value. Small details like this help avoid timing issues between schema changes, backfills, and software rollouts during your deployment.
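A minimal sketch of handling a missing value the same as the default, assuming a new column named `reward_points` that may not be backfilled yet. `UserStats` and `DEFAULT_REWARD_POINTS` are hypothetical; with ActiveRecord, a row the backfill has not reached would simply return nil for the new column.

```ruby
# Hypothetical sketch: treat a missing (not yet backfilled) value exactly like
# the default.
DEFAULT_REWARD_POINTS = 0

UserStats = Struct.new(:user_id, :reward_points) do
  # Callers use this instead of reading reward_points directly.
  def effective_reward_points
    reward_points.nil? ? DEFAULT_REWARD_POINTS : reward_points
  end
end

UserStats.new("u1", nil).effective_reward_points # => 0 (not backfilled yet)
UserStats.new("u2", 25).effective_reward_points  # => 25
```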

Implementing Feature Gates in Rails Applications

A feature gate mechanism requires two primary capabilities:

- Storage and administration of the feature gate values

- Inline code checks to determine whether the feature is turned on for a given user

The Ruby gems rollout and rollout-ui provide these capabilities for Rails apps. First, add both of them to your Gemfile, along with mock_redis. Rollout stores feature flag values in Redis so they can be read quickly at runtime. This matters because every relevant request needs to check the value, and simple caching would not be sufficient given our requirement to roll back quickly if needed. The mock_redis gem provides an in-memory Redis stand-in for easy development and testing in non-production environments.

```ruby
gem 'rollout'
gem 'rollout-ui'

group :development, :test do
  gem 'mock_redis'
end
```

The user interface for Rollout is added simply by including a mount in your routes file.

```ruby
# config/routes.rb, inside the Rails.application.routes.draw block
mount Rollout::UI::Web.new => '/admin/rollout'
```

Configure Rollout in your application.rb file.

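```ruby
# Use an in-memory Redis stand-in outside production and real Redis in production.
$redis = if Rails.env.production?
           Redis.new
         else
           MockRedis.new
         end

# Log flag changes, keeping up to 100 history entries.
$rollout = Rollout.new($redis, logging: { history_length: 100, global: true })

# Point the admin UI at the same Rollout instance.
Rollout::UI.configure do
  instance { $rollout }
end
```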

Using Rollout in your Application

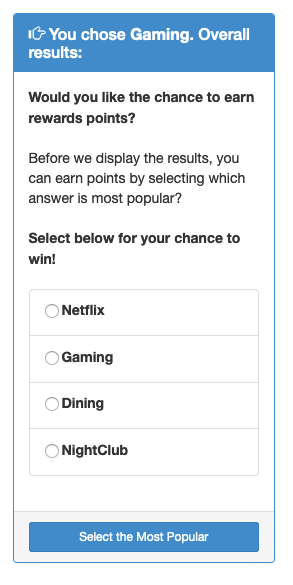

You are now ready to create a feature flag and use it in your code. I am going to enhance my Instant Poll application by adding a feature where users are prompted to guess what they think the most popular answer is. This could be different from their answer to a poll question. We will also give them the chance to earn rewards points by doing so.

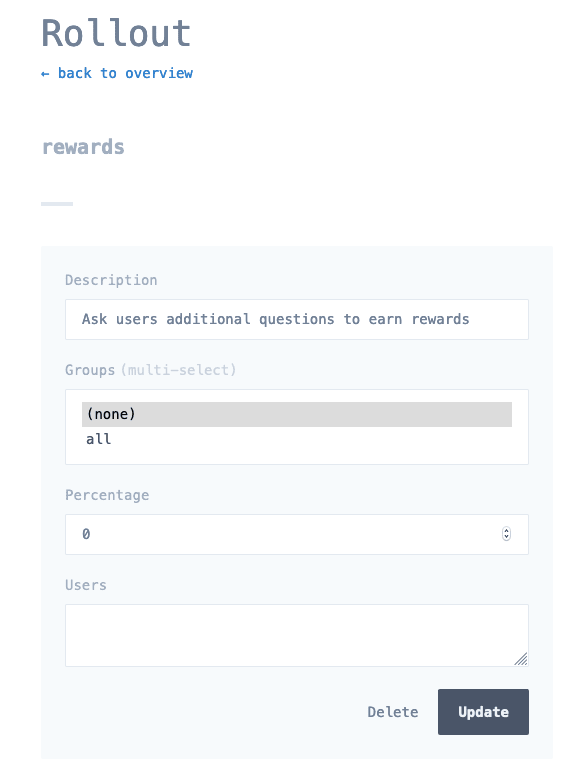

Create a named feature by browsing to `<host>/admin/rollout`. Here we create a feature called ‘rewards’.

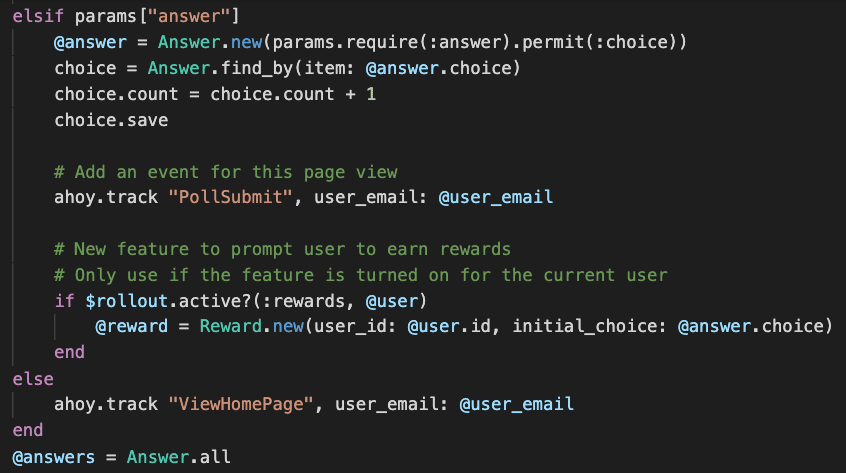

Next, we add a Reward model class to capture the user’s additional guess and the associated rewards points. In our PollController, we check the feature gate for the current user using the `$rollout.active?` method. If the feature is turned on, we instantiate a Reward object and put it in scope for the view component to use.

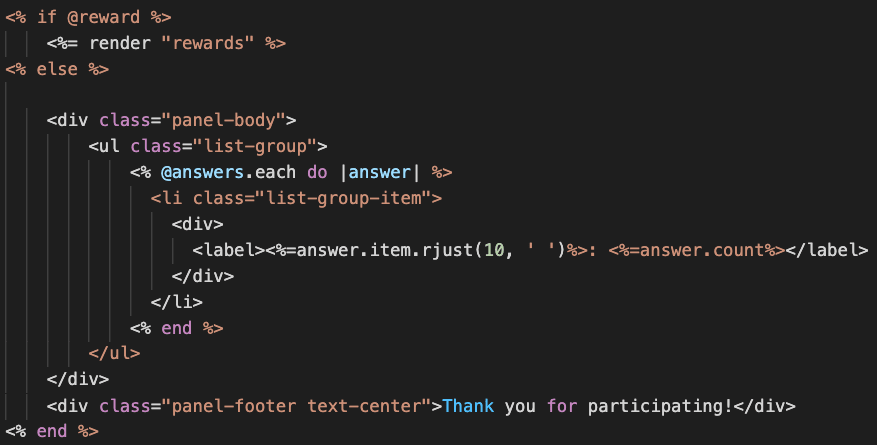
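The gate check can be sketched in plain Ruby so it runs without Rails or Redis. Only the `active?(feature, user)` call shape comes from the rollout gem; `StubRollout`, `User`, the `Reward` fields, and `reward_for` are hypothetical stand-ins, not the author's controller code.

```ruby
# Plain-Ruby sketch of the gate check. StubRollout mimics only the shape of
# the rollout gem's active?(feature, user) call.
User = Struct.new(:id)
Reward = Struct.new(:user, :guess, :points)

class StubRollout
  def initialize(enabled_user_ids)
    @enabled_user_ids = enabled_user_ids
  end

  def active?(feature, user)
    feature == :rewards && @enabled_user_ids.include?(user.id)
  end
end

# Build a Reward only when the gate is on, so the view renders the extra
# question only when a reward is present. In a Rails controller this would be
# roughly (hypothetical):
#   @reward = Reward.new(user: current_user) if $rollout.active?(:rewards, current_user)
def reward_for(rollout, user)
  rollout.active?(:rewards, user) ? Reward.new(user, nil, 0) : nil
end

rollout = StubRollout.new([1])
reward_for(rollout, User.new(1)) # a Reward, so the view shows the question
reward_for(rollout, User.new(2)) # nil, so the view skips it
```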

Then in the view component, we will display our additional rewards question.

That’s all there is to it! Once your feature is completely rolled out, or generally available, you can clean up the feature gate code leaving only the new path. Here is the result for a user where the feature is enabled.

We hope this helps, and we look forward to seeing what new features you build!